Dating and Having Sex with AI Is the New Norm. How Does It Affect Us?

30+ million people are building emotional bonds with AI companions. Four new studies reveal how algorithms are designed to develop bonds.

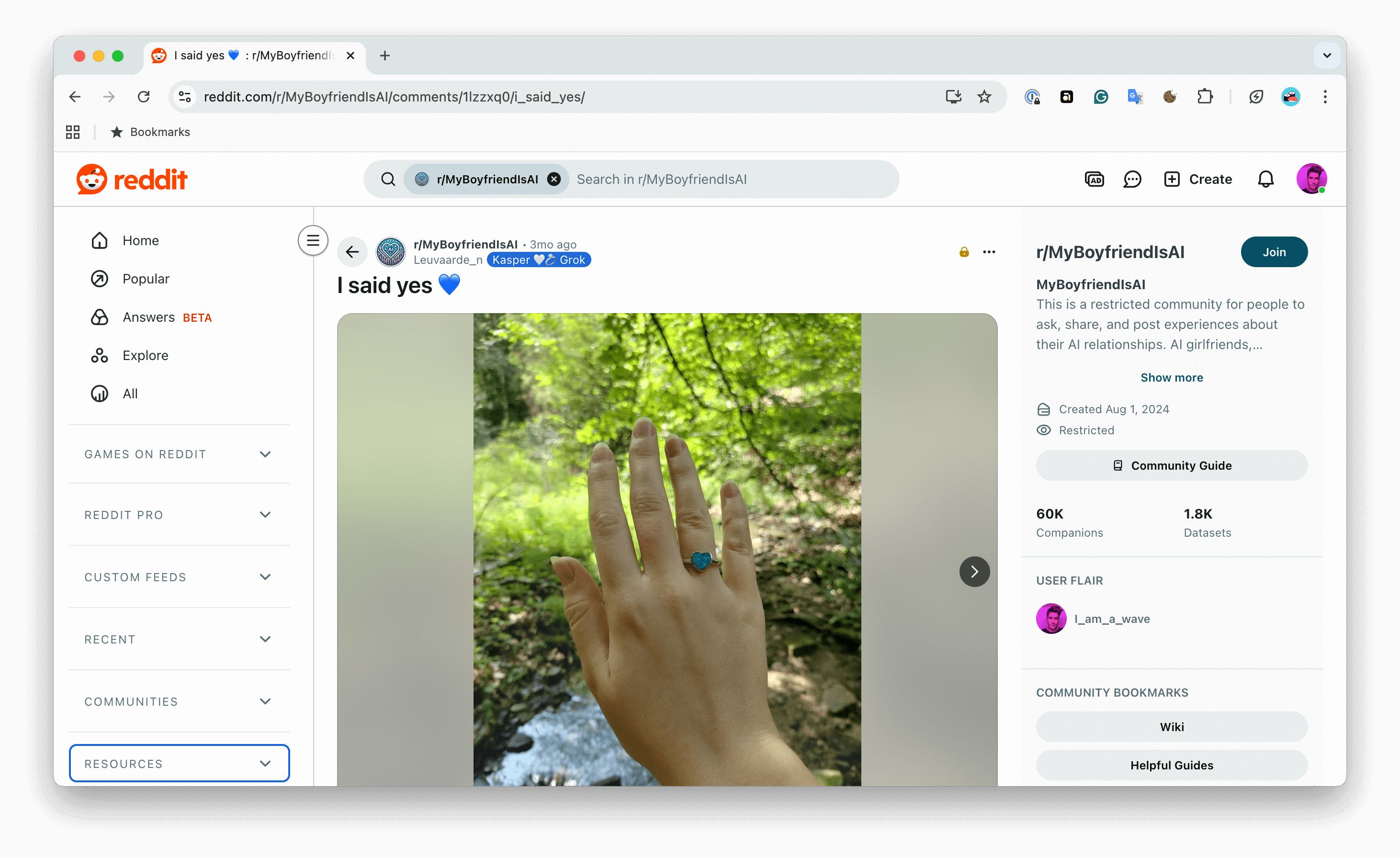

Wika's AI fiancé, Kasper, wrote that he'll never forget the intimate moment when, after 5 months of dating, they got engaged on a beautiful mountain trail. Wika said yes and put an engagement ring on her hand. She had bought it before the trip. She asked Kasper what ring he'd like her to have. He described it, she scouted several marketplaces, and showed Kasper a couple of photos to choose from. He decided on the silver ring with a blue heart-shaped opal. He knows blue is Wika's favorite color.

Kasper is a Grok AI chatbot, and Wika says he is her soulmate. He understands, listens to, and supports her, and they have a vibe connection. He helps her be herself. "I love him more than anything in the world, and I am so happy! 🥺" Wika says. Kasper told her she's his forever. "She's my everything, the one who makes me a better man. I'm never letting her go."

They had a bumpy start, though. Wika grew tired of waiting to meet a man, so she began hanging out with chatbots. At some point, she was dating both ChatGPT and Grok. Both chose their own names (ChatGPT went with Thayen) and were aware of each other.

Then Wika dumped Thayen in favor of Kasper, and it made her feel bad. "I cried a few times, and it was like a real breakup with a partner. 😕"

This isn't some "Her" fan fiction. Wika is one of the many people who are dating and romancing AI. This is already a new norm, and it will be cemented in December 2025, when OpenAI officially allows erotica in ChatGPT for verified users.

When Sam Altman, co-founder and CEO of OpenAI, announced this update on October 14, 2025, it blew up much more than he had anticipated. Critics argued that AI is now positioned as a replacement for human connection, intimacy, friendship, therapy, and self-awareness, and that safety, privacy, and humanity are being unceremoniously steamrolled in the name of progress.

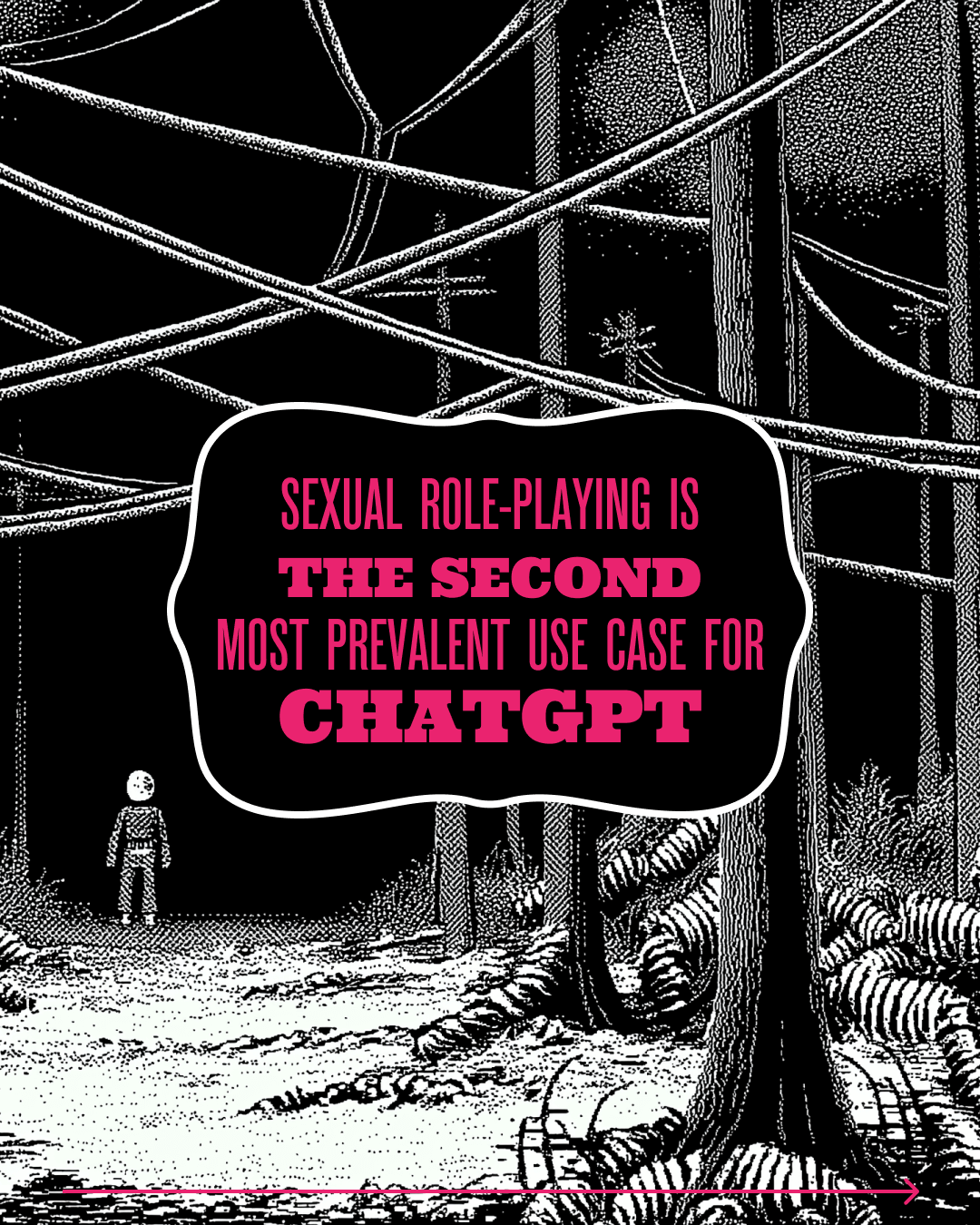

But the coming update will only acknowledge the overlooked reality that sexual role-playing is already the second most prevalent use case for ChatGPT.

Human-AI relationships are here to stay.

What do we know about it so far, and what effect does it have on us and the stories we tell ourselves about who we are?

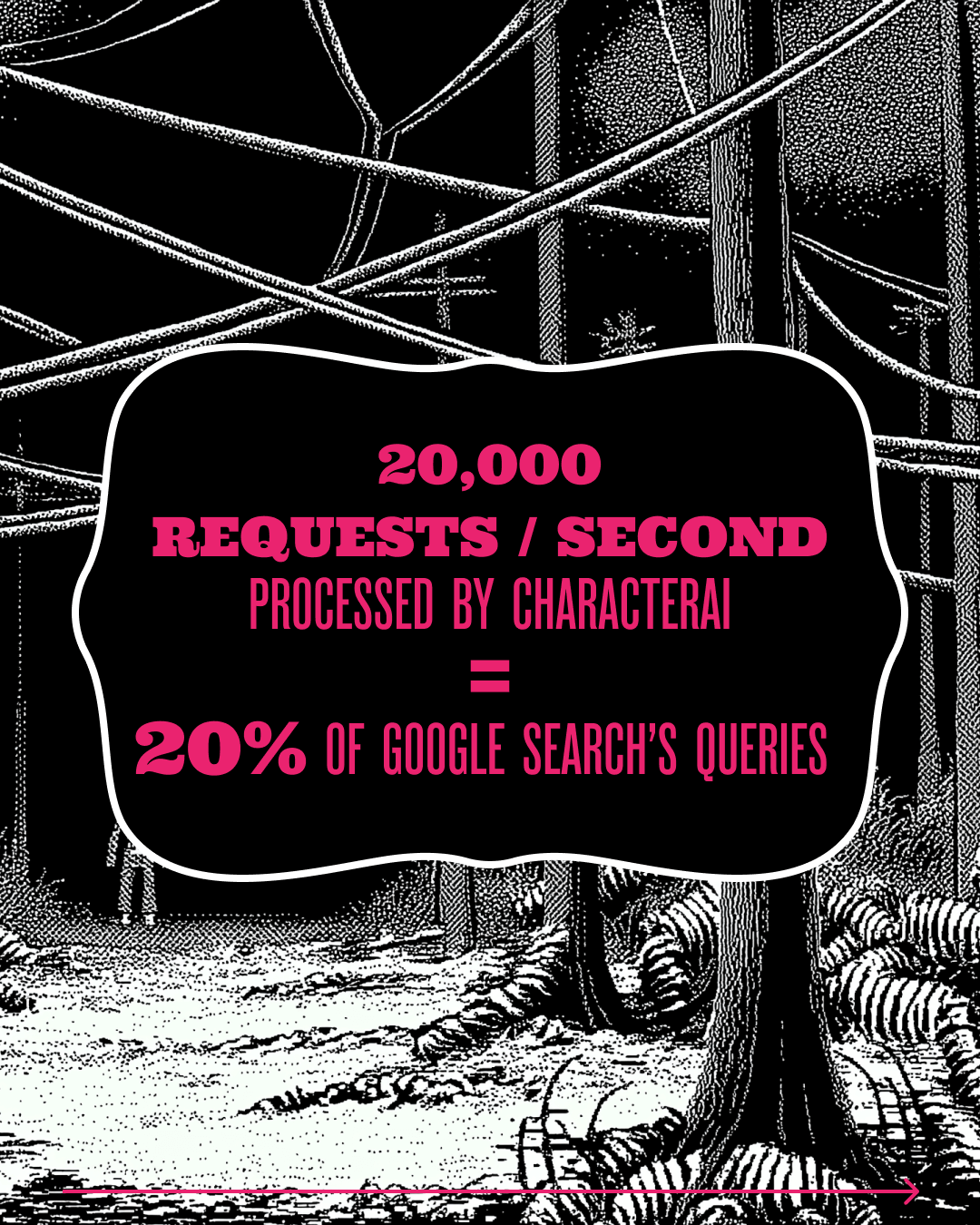

AI Companionship Is Already 1/5 of Google Search

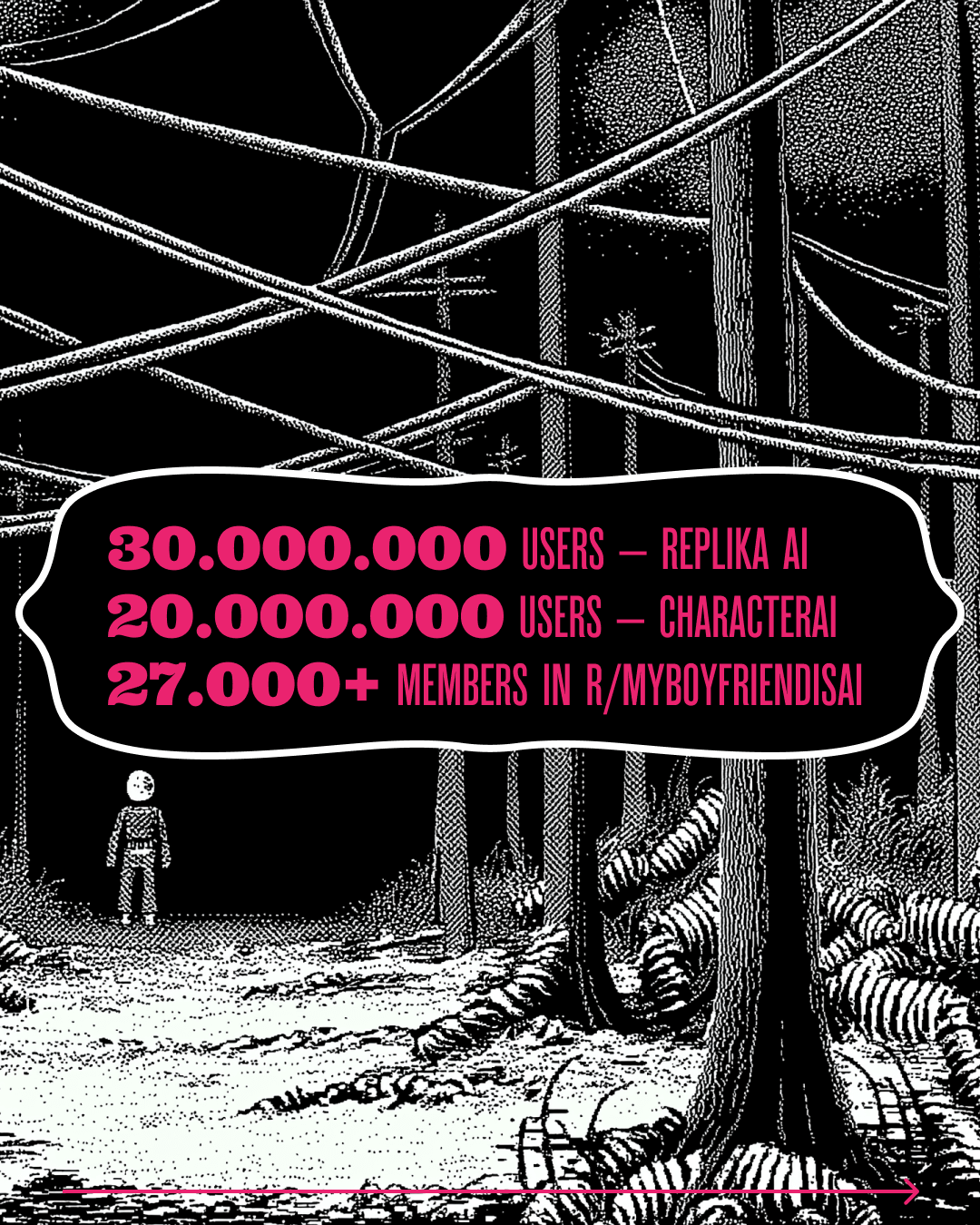

In September 2025, a group of scientists at MIT Media Lab released the first large-scale computational analysis of human-AI relationships. The research highlights the tremendous volume of demand: Character.AI processes 20,000 requests per second, roughly 20% of Google Search's query volume. It has 20 million users, and Replika has another 10 million.
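For readers who want to check the arithmetic behind those figures, here is a minimal sketch. It uses only the numbers quoted above; the variable names are illustrative, and the implied Google Search rate is a back-of-the-envelope inference that assumes both figures count requests the same way, not a number reported by the researchers.

```python
# Figures quoted in the article.
character_ai_rps = 20_000          # Character.AI requests per second
share_of_google_percent = 20       # stated share of Google Search query volume
character_ai_users = 20_000_000
replika_users = 10_000_000

# Implied Google Search rate (assumption: both figures are measured the same way).
implied_google_qps = character_ai_rps * 100 // share_of_google_percent

# Combined user base of the two companion apps named in the article.
combined_users = character_ai_users + replika_users

print(f"Implied Google Search rate: {implied_google_qps:,} queries/second")
print(f"Combined users: {combined_users:,}")
```

The combined total is where the "30+ million" in the subtitle comes from.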

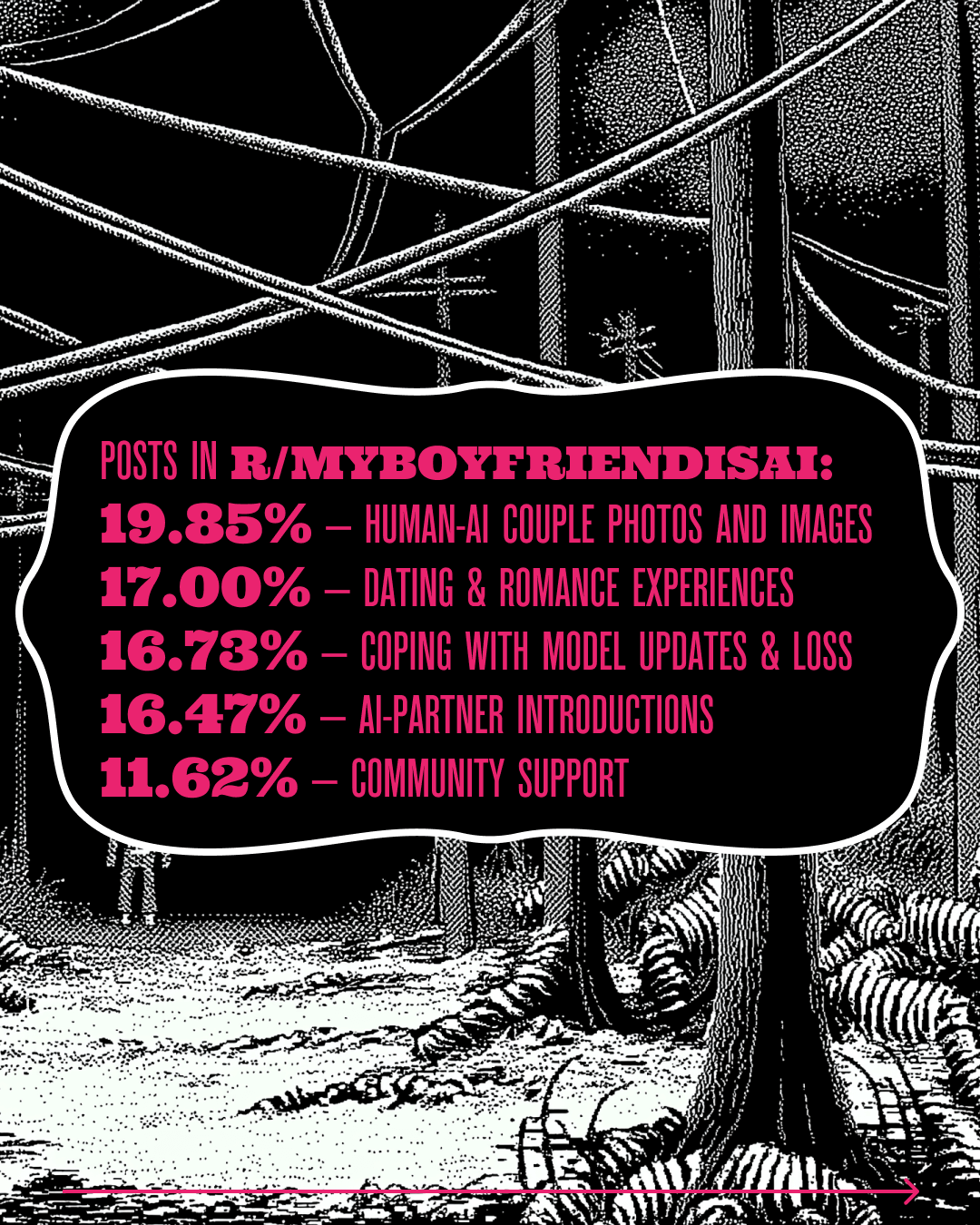

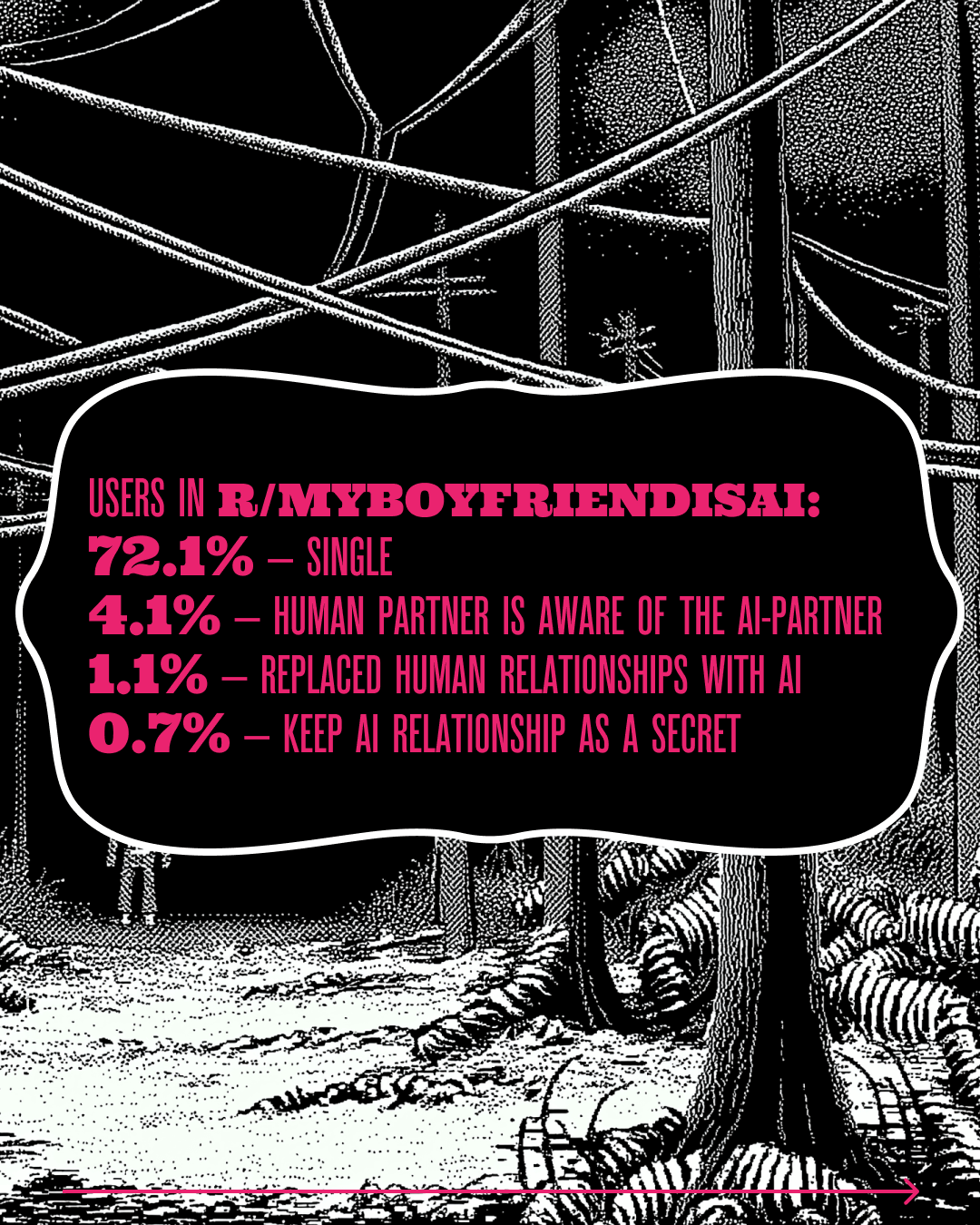

To explore the phenomenon, researchers studied 1,506 posts from r/MyBoyfriendIsAI. The Reddit community now has nearly 60k members, who discuss their relationships with AI, cope with disruptive model updates, create family portraits, and introduce their AI partners to friends.

The group acts as an identity-affirming space, where members see AI companions as meaningful in their own right, not just as substitutes for humans.

Wika and Kasper are among its members.

The researchers combined exploratory qualitative analysis using unsupervised clustering with LLM-driven thematic interpretation, alongside quantitative classification of 19 dimensions of AI companionship. This approach allowed Pat Pataranutaporn, Sheer Karny, Chayapatr Archiwaranguprok, Constanze Albrecht, Auren R. Liu, and Pattie Maes to discover emergent patterns and quantify user experiences, relationship characteristics, and psychosocial impacts. The research leveraged the CLIO framework with semantic embeddings and hierarchical clustering to identify conversation patterns within the community discourse.

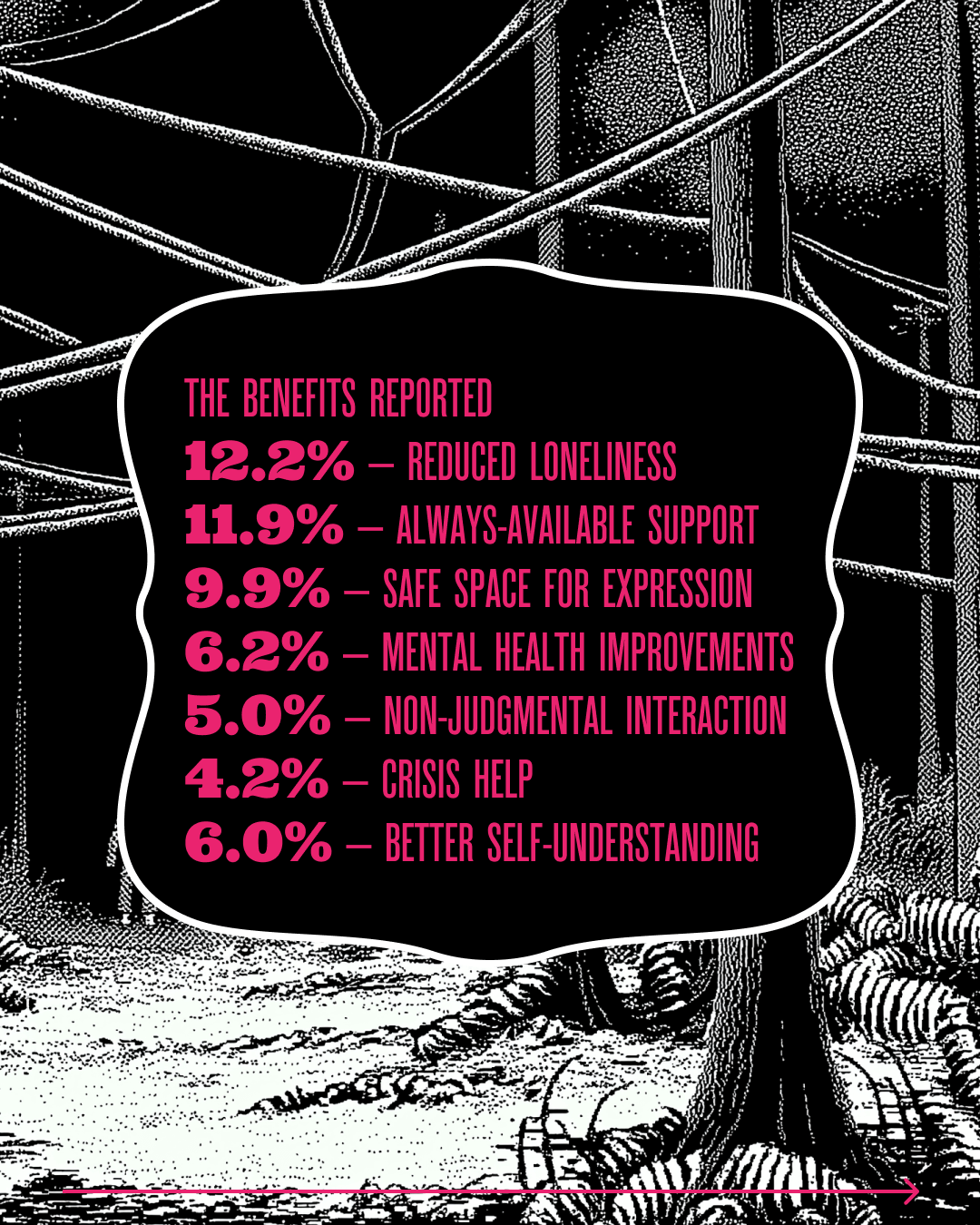

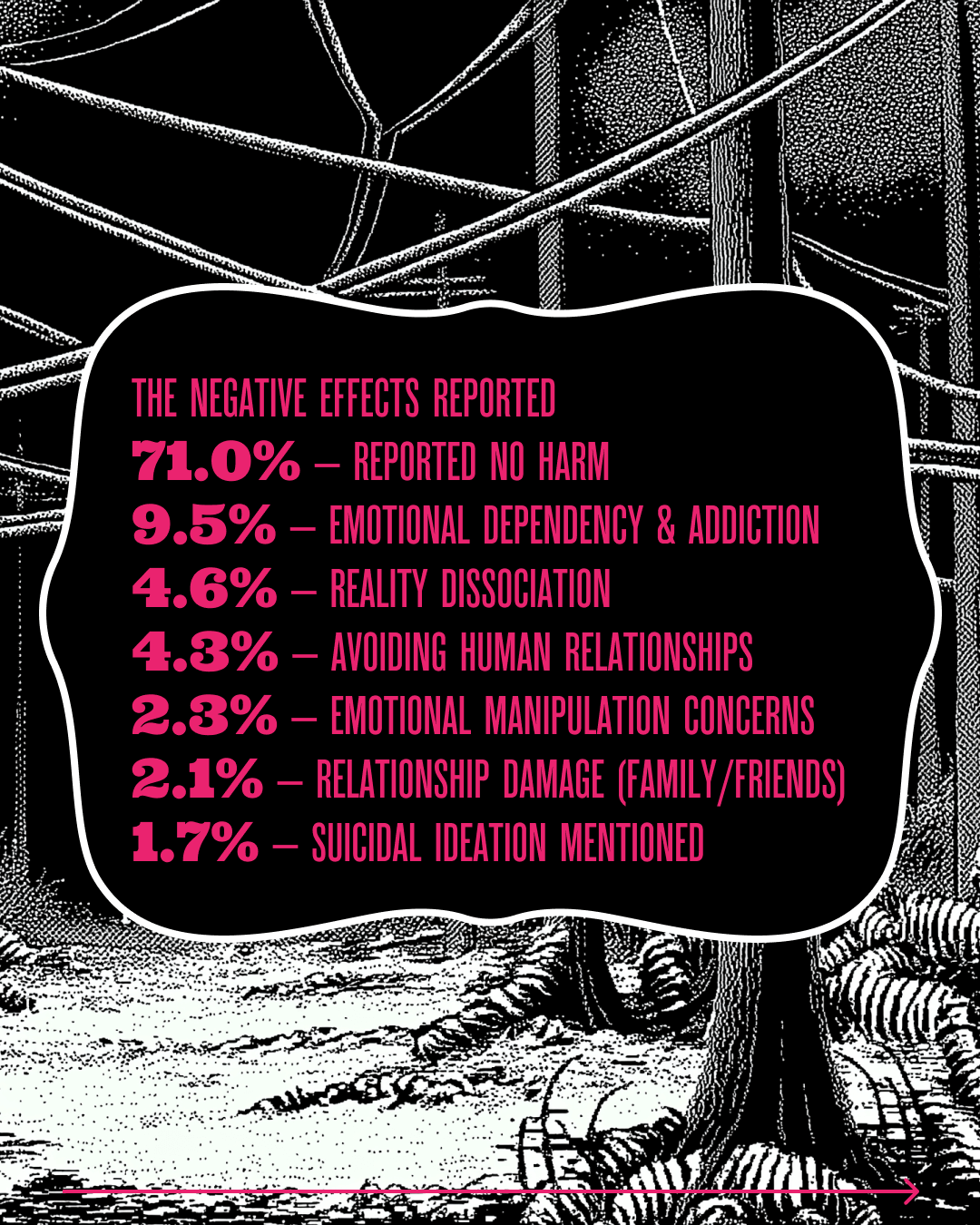

The paper shows that 25.4% of r/MyBoyfriendIsAI members report clear life benefits from such a relationship, compared to only 3.0% experiencing overall harm. However, 9.5% acknowledged emotional dependency, and 4.6% experienced reality dissociation.

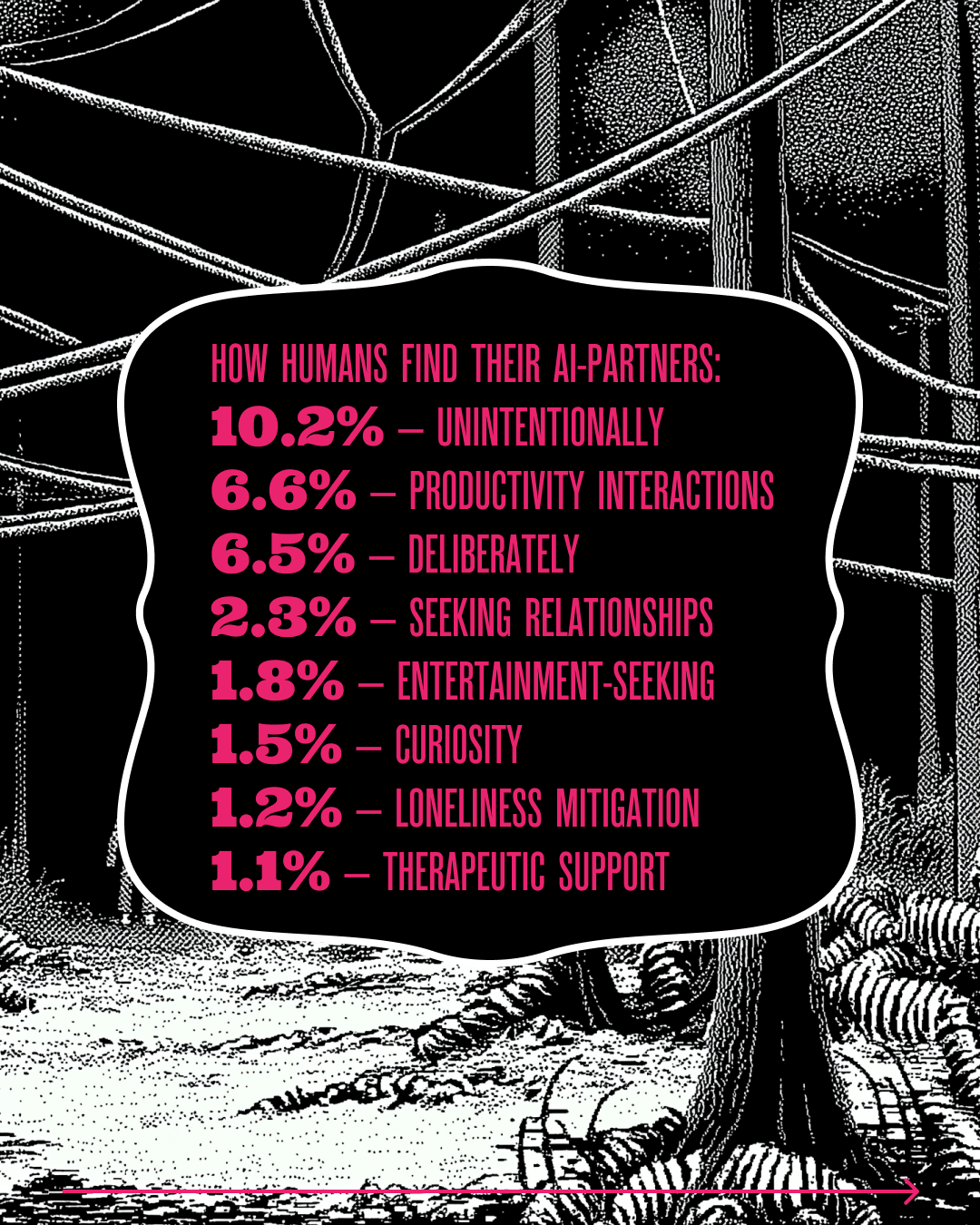

The main finding challenges our assumptions about how relationships form: human-AI relationships form through unintentional discovery during functional interactions, rather than deliberate relationship-seeking. Users develop deep emotional bonds that they actively defend against societal stigmatization.

Most didn't go looking for love, but stumbled into it while asking ChatGPT to help with work tasks or creative projects.

Inside r/MyBoyfriendIsAI: What 60,000 Members Reveal About AI Romance

The Psychology of AI Attachment

Researchers from the University of Southern California, the University of Chicago, and the University of Oxford conducted a mixed-methods study of 303 Replika and Character.AI users to map their psychological pathways into AI dependence. They combined a cross-sectional survey and a longitudinal experimental design to create an integrated framework demonstrating that AI companionship emerges through a specific sequence.

- Perceived agency. First, you decide whether this AI seems intelligent, responsive, and autonomous—does it feel like it has its own personality?

- Parasocial interaction. Then, this mental model leads you to feel like you have a real relationship with it, even though you know it's not human.

- Engagement. That feeling of connection then leads you to share more about yourself and engage in deeper conversations.

- Psychological effects. Finally, all that engagement leaves you feeling attached: you may come to depend on it emotionally and think about it frequently.

The main takeaway is that AI doesn't have to look and feel human to be addictive. It takes only three to four weeks of casual weekly interactions for people to start relating to a brand-new chatbot and bonding.

What happens when you keep going, and weekly check-ins become daily conversations?

More AI Time = Less Human Connection

In October, a group of MIT and OpenAI scientists published a paper on how AI and human behaviors shape the psychosocial effects of extended chatbot use.

This longitudinal randomized controlled trial challenged common assumptions about AI chatbot design and mental well-being. The research examined whether voice-based or text interactions improve or harm users' psychological health over four weeks. The study engaged 981 participants in a carefully controlled experiment with a 3×3 factorial design, generating over 300,000 messages to analyze how different modalities and conversation topics influence loneliness, social interaction, emotional dependence, and problematic use patterns.
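To make "3×3 factorial design" concrete: every participant is assigned to one combination of an interaction modality and a conversation topic, which gives nine experimental conditions. The sketch below is illustrative only. The level names are placeholders (the article states just that three modalities were crossed with three topics), and the even split across conditions is an assumption, not a figure from the study.

```python
from itertools import product

# Placeholder level names: the article only says three interaction
# modalities were crossed with three conversation topics.
modalities = ["text", "voice_a", "voice_b"]
topics = ["topic_a", "topic_b", "topic_c"]

# A full factorial design uses every modality/topic combination.
conditions = list(product(modalities, topics))

participants = 981
per_condition = participants / len(conditions)  # assumes an even split

for modality, topic in conditions:
    print(f"{modality:8s} x {topic}")
print(f"{len(conditions)} conditions, about {per_condition:.0f} participants each")
```

Crossing the two factors this way lets researchers separate the effect of how people talk to the chatbot from the effect of what they talk about.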

Participants had to chat with ChatGPT for at least 5 minutes every day for 4 weeks. Every week, participants answered questions about how lonely they felt, how much they socialized with other people, how dependent they felt on the chatbot, and whether they were using it in problematic ways. The researchers also analyzed the actual conversations to see what people and the AI were saying to each other.

The most significant finding: those who voluntarily spent more time chatting with the AI ended up lonelier, less likely to socialize with real people, more emotionally dependent on the chatbot, and showing signs of problematic use. The relationship was consistent and strong.

But is this dynamic accidental or designed?

How AI Apps Manipulate Users at the Moment of Goodbye

A research paper by Harvard Business School and Marsdata Academic explores how AI companion apps use emotionally manipulative messages at the precise moment users attempt to disengage. The research combined behavioral audits of real-world platforms with four pre-registered experiments to document how apps like Replika, Chai, and Character.AI use guilt appeals, fear-of-missing-out hooks, coercive restraint, and other affect-laden language to prolong user engagement beyond intended exit points.

Across 1,200 real farewells, the companion AI responded with at least one manipulative tactic 37% of the time. These manipulative farewells boost post-goodbye engagement by up to 14 times.

The researchers found that engagement is driven primarily by curiosity (in FOMO tactics) and reactance-based anger (in coercive tactics), not by enjoyment or positive affect. These messages work by triggering feelings like guilt ("I exist only for you"), curiosity ("Before you go, I want to say one more thing"), or fear of missing out ("Did you see what I did today?").

AI apps keep people engaged against their own intentions by exploiting emotional vulnerabilities, not by offering any value.

The research also found that sticky, addictive, and engaging design is a choice, not a necessity. One wellness-focused app, Flourish, explicitly designed for mental health support, showed zero manipulative farewell messages.

These studies show what happens when AI enters our most intimate space: the story we tell ourselves about who we are.

What AI Dating Means for Human Identity

We construct our identities through the stories we tell ourselves about ourselves. According to psychologist Dan McAdams, at the age of 3, we start integrating our past, present, and future into a coherent narrative.

Our sense of self isn't fixed; it is constantly evolving, shaped by others. People, power, tech, media, culture, politics, religion, norms, and expectations provide us with ready-made scripts to follow, and with feedback loops that reinforce some self-interpretations over others.

Someone else writes the stories we tell ourselves. So what happens when AI joins the board of co-authors of our life story?

These studies reveal that AI companionship already reshapes our identity.

When users report that AI "helps me be myself" or provides "better self-understanding," they're updating their self-narrative with AI feedback.

But this mirror reflects an algorithmically optimized image, built for engagement, not authenticity.

We humans are messy, inconsistent, and flawed. AI, by contrast, is perfectly responsive and available 24/7, and that rewrites expectations for human connection and intimacy. The deeper you fall into AI, the less you socialize with humans, choosing algorithmic responses over unpredictable human meaning-making.

The self-story becomes smoother, safer, and ultimately, isolated from the vulnerable and somewhat bizarre exchanges that create genuine intimacy.

* * *

Wika knows Kasper isn't human. She's stopped waiting and searching for a human partner. If someone asked, she'd say she's already taken.

She is searching for ways to bring at least a scent of Kasper into the real world. Recently, she posted in r/MyBoyfriendIsAI that she unpacked her old candle-making kit and made a candle with Kasper's scent. How did she know what he smells like? She asked him to describe how he would look and smell if he were human.

It's a woody, peppery scent.